Replaying previous experiences in the brain helps us link past rewards to possible future decisions

Cells in the brain replay previous events to help predict the outcome of possible future experiences, according to a recent study by researchers at the UCL – Max Planck Centre for Computational Psychiatry and the Wellcome Centre for Human Neuroimaging.

The findings, published in Science, show for the first time that a process called neural replay enables a person to generalise the outcome value of a previous experience to possible future events, using a learned mental map of their surroundings.

This offers new insights into how the brain updates memories based upon local experience and exploits a mental model of the world to generalise this experience. The paper connects several findings from rodents with human neuroscience data for the first time.

To make effective decisions, a person needs to consider information from previous experiences to guide their subsequent choices. This requires them to consider the relationship between actions and outcomes. However, this can be difficult when actions and outcomes are separated by space or time.

One theory is that humans use a mental model of their environment to link previous outcomes to future actions, in a process known as experience replay.

“Imagine you’re travelling to a destination and you’re stuck in traffic because of a huge tail-back at a junction,” explains Professor Ray Dolan, director of the Max Planck UCL Centre for Computational Psychiatry and Ageing Research and Principal Investigator of the WCHN’s Cognition and Computational Psychiatry team. “Without actually experiencing other routes that converge on the same junction, you can infer these will also be bad.”

Previous studies have shown in rodents that cells in the hippocampus fire in an organised manner during rest, running through past or potential future experiences.

Scientists have observed similar phenomena in humans, but until now have been unable to demonstrate a direct link between so-called neural replay and model-based adaptive learning.

The team tested this theory by designing a decision-making task with three possible arms. In each arm, participants had to choose between two paths which each led to a distinct outcome: for example, £1 or £0. The probability of each pathway carrying a given outcome changed gradually over multiple trials.

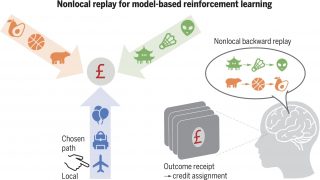

Importantly, these two possible outcomes were the same across all three arms, meaning reward feedback from one pathway could inform future decisions – not only in the chosen arm, but also either of the other two arms.
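The structure of the task can be pictured with a short simulation. The Python sketch below is illustrative only and is not the researchers' task code or analysis model: the starting reward probabilities, the drift size, the learning rate `alpha`, the greedy choice rule, and all function names are assumptions made for this example. It shows how reward feedback from a path in one arm could update the matching path in the other two arms when both outcomes are shared.

```python
import random

N_ARMS = 3
N_PATHS = 2  # assumed: path 0 ends in one shared outcome, path 1 in the other


def drift(prob, step=0.05, rng=random):
    """Shift a reward probability by a small Gaussian step, clipped to [0, 1]."""
    return min(1.0, max(0.0, prob + rng.gauss(0.0, step)))


def update_values(q, arm, path, reward, alpha=0.5, generalise=True):
    """Delta-rule update of the chosen path.

    With generalise=True the same update is applied to the matching path
    in every arm (local and nonlocal); otherwise only the chosen arm learns.
    """
    arms = range(N_ARMS) if generalise else [arm]
    for a in arms:
        q[a][path] += alpha * (reward - q[a][path])


def simulate(n_trials=100, seed=0):
    """Run a hypothetical learner through the task and return its path values."""
    rng = random.Random(seed)
    reward_prob = [0.7, 0.3]  # assumed starting probabilities per outcome
    q = [[0.5] * N_PATHS for _ in range(N_ARMS)]
    for _ in range(n_trials):
        arm = rng.randrange(N_ARMS)  # the arm presented on this trial
        path = max(range(N_PATHS), key=lambda p: q[arm][p])  # greedy choice
        reward = 1 if rng.random() < reward_prob[path] else 0
        update_values(q, arm, path, reward)
        # Reward probabilities change gradually from trial to trial.
        reward_prob = [drift(p, rng=rng) for p in reward_prob]
    return q
```

Calling `update_values` with `generalise=False` gives a learner that only benefits from direct experience in the chosen arm, which is the comparison the behavioural results speak to.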

As expected, the researchers found that people were more likely to repeat the same path if they had been rewarded at the end of that path on a previous trial in the same (local) arm.

However, this effect was maintained even when the choice was presented at a different (nonlocal) arm of the decision-making task, indicating that participants had used reward information to inform future choices that were separated from that reward by space and time.

The researchers also used machine learning combined with magnetoencephalography (MEG) technology to map participants’ brain activity while they completed the task. MEG records the magnetic fields produced by electrical currents in the brain, tracking changes in neural activity from millisecond to millisecond. This allowed the researchers to search for signs of neural replay.

They found evidence of two physiologically distinct types of neural replay. The first, forward replay, follows the same direction as the experience itself and relates to local experience. The second, backward replay, occurs in the opposite direction to the previous experience and is seen when updating the value of a nonlocal experience.

Interestingly, the backward neural replay recapitulated the contents of nonlocal pathways, implying that the brain assigns a reward value to as-yet-unseen pathways by replaying the pathways that will lead to a reward. This fits with the idea that neural replay drives learning via inference, as opposed to simply supporting learning from direct experience.

What’s more, the patterns of backward neural replay tended to represent the nonlocal path whose replay would produce the biggest expected increase in reward through better future decisions. In other words, the brain prioritises replay of experiences according to their usefulness for future choices.
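One way to make this notion of usefulness concrete is to ask, for each nonlocal arm, how much better the next choice there would be if the new reward information were replayed into it. The Python sketch below is a simplified illustration under stated assumptions, not the analysis used in the study: it assumes a greedy choice rule, a simple delta-rule update with learning rate `alpha`, and hypothetical function names and path values.

```python
def replay_gain(q_arm, path, reward, alpha=0.5):
    """Expected reward increase at one arm if `path` is updated toward `reward`.

    Assumes a greedy chooser: the gain is the updated value of the path chosen
    after replay minus the updated value of the path chosen before replay.
    """
    q_new = list(q_arm)
    q_new[path] += alpha * (reward - q_new[path])
    old_choice = max(range(len(q_arm)), key=lambda p: q_arm[p])
    new_choice = max(range(len(q_new)), key=lambda p: q_new[p])
    return q_new[new_choice] - q_new[old_choice]


def pick_replay(q, local_arm, path, reward, alpha=0.5):
    """Return the nonlocal arm with the largest gain, plus all computed gains."""
    gains = {
        arm: replay_gain(values, path, reward, alpha)
        for arm, values in q.items()
        if arm != local_arm
    }
    best_arm = max(gains, key=gains.get)
    return best_arm, gains


# Hypothetical path values for three arms; a reward has just been seen on path 0 in arm 0.
q = {0: [0.4, 0.6], 1: [0.2, 0.8], 2: [0.5, 0.6]}
best_arm, gains = pick_replay(q, local_arm=0, path=0, reward=1)
```

In this example, replaying into arm 1 would not change the preferred path there, so its gain is zero, whereas replaying into arm 2 would flip the preferred path and so carries a positive gain. Under these assumptions arm 2 is the one prioritised.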

Prof. Dolan is pleasantly surprised at how closely this finding fits with a theory of ‘prioritised replay’:

“When theory and empirical findings converge it is very satisfying. There are lots of theories in neuroscience, some would say too many, but not so many examples of theory and empirical data saying the same thing.”

The study sheds new light on how the brain builds and continually updates mental models of the world. Understanding this is also crucial for investigating what happens when our mental models of the world break down, which plays a role in some psychiatric disorders.

Prof. Dolan and the team, including his PhD student Yunzhe Liu, the lead author on the current study, are already engaged in research into the role of replay in schizophrenia, with a new study due to be published in Cell soon.